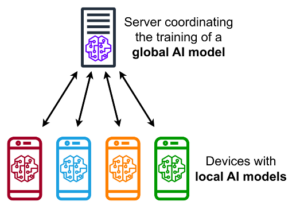

One of the open issues in reinforcement learning (RL) is generalization to different environments. For instance, an autonomous vehicle model trained on a particular map may not perform well in another region, and an RL-trained camera-based AGV may degrade under environmental disturbances such as lens dirt or low lighting. Classical RL approaches tackle this issue by exposing the agent to environment variants serially, so training time grows drastically with the number of variants. Alternatively, federated reinforcement learning can leverage parallel computing for efficient and robust training of RL agents. Federated learning (Fig. 1) maintains a parallel and decentralized training architecture, wherein each “edge agent” trains a local AI model that is periodically synchronized with a central model by exchanging model parameters rather than raw training data. This provides 1) data privacy, 2) reduced network usage, and 3) exposure to heterogeneous data through diverse yet simultaneous training.

Figure 1: Example architecture of Federated Learning [1]
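The synchronization step described above can be illustrated with a minimal sketch of weighted parameter averaging, in the style of federated averaging. This is only an illustration under stated assumptions, not the framework to be developed in the thesis: the function name `federated_average`, the flat list representation of model parameters, and the weighting by local sample count are all hypothetical choices.

```python
from typing import List, Sequence

# Assumption: each agent's model parameters are flattened into one list of floats.
Weights = List[float]


def federated_average(local_weights: Sequence[Weights],
                      num_samples: Sequence[int]) -> Weights:
    """Combine local parameter vectors into a central one.

    Each agent's contribution is weighted by the number of samples it
    trained on (a hypothetical weighting chosen for this sketch).
    """
    if not local_weights or len(local_weights) != len(num_samples):
        raise ValueError("need one sample count per agent")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(local_weights[0])
    if any(len(w) != dim for w in local_weights):
        raise ValueError("all agents must share the same model shape")
    # Weighted mean, computed independently for every parameter index.
    return [
        sum(w[i] * n for w, n in zip(local_weights, num_samples)) / total
        for i in range(dim)
    ]


# Two hypothetical edge agents with two parameters each.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

In a full training loop, the central model would send `global_weights` back to every edge agent before the next round of local training, so only parameters travel over the network.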

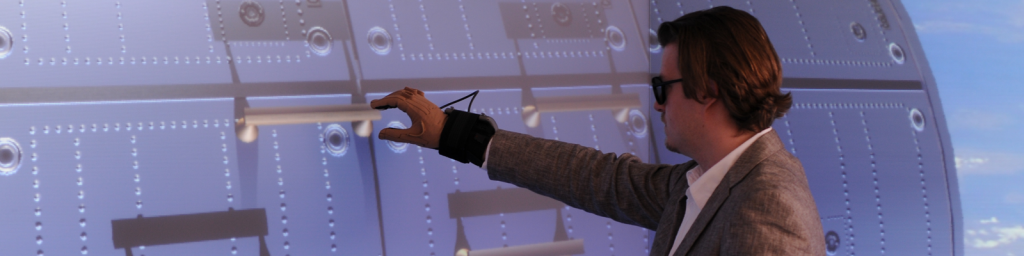

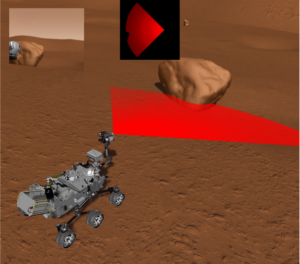

This thesis will develop a federated reinforcement learning framework for a “Mars Rover”, in which several digital twins of an autonomous rover are deployed in varied virtual environments modelling extraterrestrial surfaces (see Figure 2), so that the trained network is robust to environment variations. The thesis will comprise the following steps:

- Development of a strategy for parameterization of the environment digital twin (e.g. type and quantity of variations)

- Development and integration of a federated learning framework within the virtual testbed

- Comparison of results with classical approaches
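As a rough illustration of the first step, one possible way to parameterize environment variants is to sample a small set of disturbance parameters per virtual testbed. The sketch below is hypothetical: the class `EnvVariant`, the two parameters (taken from the disturbances named earlier, lighting and lens dirt), and their value ranges are assumptions for illustration and are not part of VEROSIM or its API.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EnvVariant:
    """One hypothetical environment variant for a single virtual testbed."""
    lighting: float   # assumed scale: 0.0 = dark, 1.0 = full daylight
    lens_dirt: float  # assumed scale: fraction of the camera lens obscured


def sample_variants(count: int, seed: int = 0) -> List[EnvVariant]:
    """Draw `count` variants; a fixed seed keeps the set reproducible."""
    rng = random.Random(seed)
    return [
        EnvVariant(
            lighting=rng.uniform(0.1, 1.0),   # assumed range
            lens_dirt=rng.uniform(0.0, 0.5),  # assumed range
        )
        for _ in range(count)
    ]


# One variant per parallel testbed, here four of them.
variants = sample_variants(4)
```

Here `count` stands for the quantity of variations and the fields of `EnvVariant` for their type; which parameters and ranges are actually meaningful is exactly what the strategy would have to determine.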

The development platform is the simulation software VEROSIM and the parallel virtual testbeds developed at MMI. A good knowledge of Python, C++ and machine learning fundamentals is recommended.

Figure 2: Camera- and radar-based navigation of the Mars Rover model

Supervisor: Maqbool