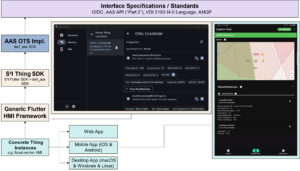

Human participation in Industry 4.0 (I4.0) processes remains essential, whether for oversight, manual control, or approval. To support this, a generic Flutter-based SDK has been developed for building flexible, reusable Human-Machine Interfaces (HMIs) connected to Digital Twins based on the Asset Administration Shell (AAS).

While this SDK enables rapid prototyping and deployment of custom HMIs, creating process-specific variants still involves manual coding and configuration. With the emergence of Large Language Models (LLMs), a new paradigm becomes possible: text-driven HMI generation.

This thesis investigates how LLMs can be used to generate tailored HMI implementations from a textual description of process behavior, roles, and required interactions. The goal is to let developers or domain experts rapidly prototype and deploy process-specific HMIs by describing their functionality in natural language.

The work involves analyzing and optimizing the existing Flutter SDK structure to be compatible with LLM-based code generation, defining reusable patterns and interface templates, and exploring methods for automating HMI customization and integration with AAS Submodels.
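To make the idea of an HMI specification template more concrete, here is one possible intermediate format. The LLM emits a structured spec instead of raw Flutter code, and that spec is checked against the widgets the SDK offers and the Submodel elements the Digital Twin exposes before anything is generated or deployed. This is a minimal sketch under stated assumptions: the widget names, the `WidgetSpec`/`HmiSpec` types, the `parse_spec`/`validate` helpers, and the `Submodel/Element` binding format are all hypothetical and are not part of the existing SDK or the AAS specification. Python is used only for brevity; the actual SDK is written in Dart/Flutter.

```python
import json
from dataclasses import dataclass, field

# Hypothetical whitelist of widget types the SDK would expose to the LLM.
ALLOWED_WIDGETS = {"status_display", "button", "approval_dialog", "numeric_input"}


@dataclass
class WidgetSpec:
    widget: str                 # must be one of ALLOWED_WIDGETS
    label: str                  # text shown to the operator
    binding: str                # hypothetical "SubmodelIdShort/ElementIdShort" path
    roles: list[str] = field(default_factory=list)  # roles allowed to use it


@dataclass
class HmiSpec:
    process: str
    widgets: list[WidgetSpec]


def parse_spec(raw: dict) -> HmiSpec:
    """Turn a decoded LLM JSON answer into an HmiSpec.

    Missing or unexpected widget keys raise TypeError (KeyError for missing
    top-level fields), which can itself be fed back to the LLM as a re-prompt.
    """
    widgets = [WidgetSpec(**w) for w in raw["widgets"]]
    return HmiSpec(process=raw["process"], widgets=widgets)


def validate(spec: HmiSpec, submodel_paths: set[str]) -> list[str]:
    """Return all problems found; an empty list means the spec may be rendered."""
    errors = []
    for i, w in enumerate(spec.widgets):
        if w.widget not in ALLOWED_WIDGETS:
            errors.append(f"widget {i}: unknown type '{w.widget}'")
        if w.binding not in submodel_paths:
            errors.append(f"widget {i}: unknown binding '{w.binding}'")
    return errors


# Example: an LLM answer that invents a widget type and a binding.
llm_answer = """{
  "process": "batch-release",
  "widgets": [
    {"widget": "status_display", "label": "Tank level",
     "binding": "OperationalData/TankLevel"},
    {"widget": "approval_dialog", "label": "Release batch",
     "binding": "Approval/ReleaseBatch", "roles": ["shift_lead"]},
    {"widget": "slider", "label": "Setpoint", "binding": "Control/Setpoint"}
  ]
}"""

spec = parse_spec(json.loads(llm_answer))
# Paths that the (hypothetical) Digital Twin actually exposes.
problems = validate(spec, {"OperationalData/TankLevel", "Approval/ReleaseBatch"})
print(problems)
```

One reason to place such a check between the LLM and the SDK is that hallucinated widget types or bindings to non-existent Submodel elements surface as explicit, reportable errors that can be returned to the model, rather than as compile or runtime failures in the generated HMI.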

Key tasks include:

- Analysis and restructuring of the Flutter-based HMI SDK for LLM-assisted code generation
- Development of prompt strategies and HMI specification templates for different I4.0 interaction scenarios
- Implementation of a prototype assistant that generates and deploys HMI components based on text input
- Evaluation of LLM output quality, modularity, and runtime integration with AAS-based backends
- Demonstration of generated HMIs in example I4.0 processes including human-in-the-loop tasks

Supervisor: Bektas